Key challenges with managing data pipelines

5 August 2022 | Noor Khan

Data is constantly evolving and growing, and this directly increases the complexity of the data pipelines that aggregate, transform and transfer it from source to destination. As the number of data sources grows, businesses are looking to build secure, robust and scalable data pipelines to collate their data and get the full picture. Data visibility can generate incredibly valuable outcomes for businesses: according to Richard Joyce, Senior Analyst at Forrester, as little as a 10% increase in data visibility can result in more than $65 million in net income for a typical Fortune 1000 company. Businesses could therefore benefit significantly from robust data pipelines that contribute to overall data visibility.

We have covered what to look out for when building a scalable data pipeline. Here, we cover the key challenges of managing data pipelines and how you can overcome them.

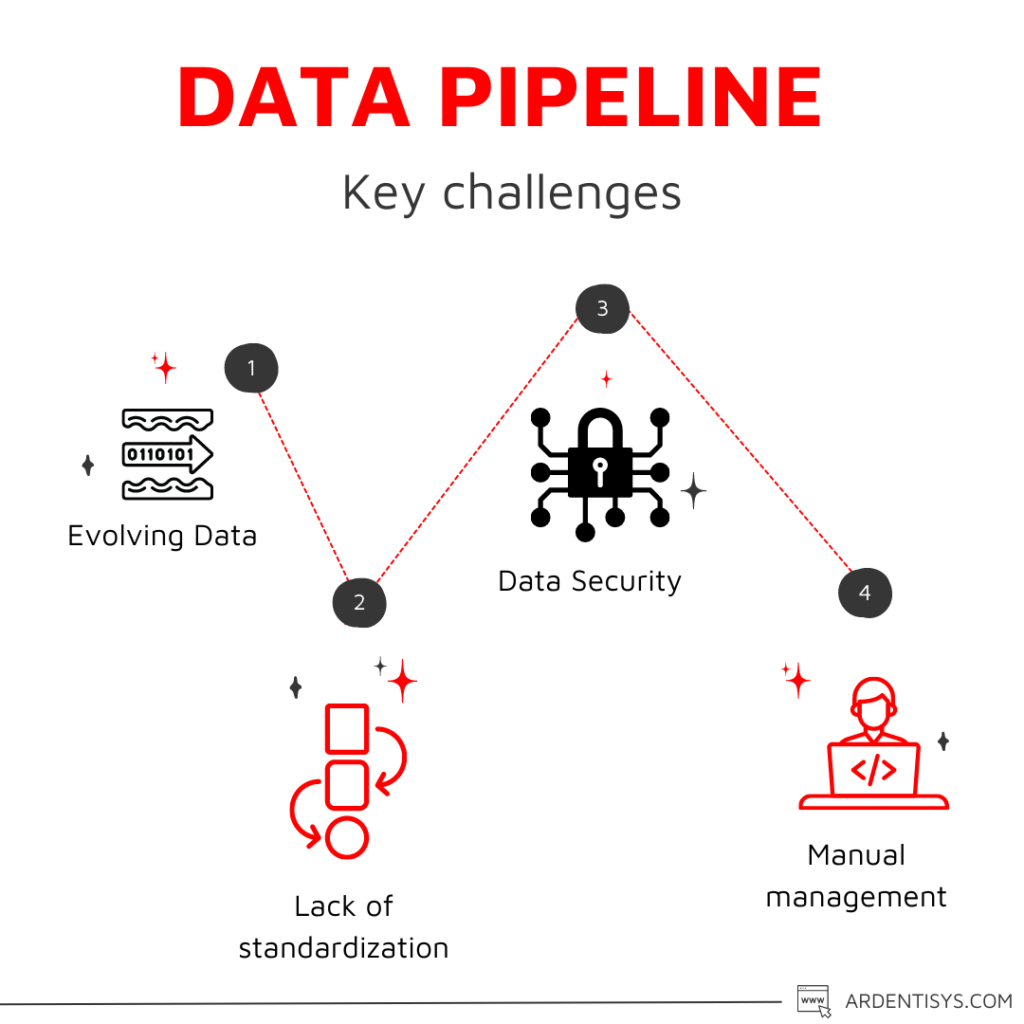

Evolving data

One of the key challenges you may face in managing data pipelines effectively is evolving data. A business will have multiple sources and a variety of data, and both will continue to change over time. This can make it harder to process the data and carry it to its destination, whether that is a data warehouse or a data lake.

Below we look at the three main aspects of evolving data.

Data volume

Scalability should be a key component of any good data pipeline. A scalable pipeline should be able to deal with large volumes of data coming in from multiple sources. It is built with changing data volumes in mind, so that the data can still be processed quickly and efficiently as it grows.
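One common way to keep a pipeline step stable as volumes grow is to process records in fixed-size batches rather than loading everything into memory at once. The sketch below is only an illustration of that idea: the function names `batched` and `process_in_batches`, and the default batch size, are assumptions and not part of any particular Ardent solution.

```python
from itertools import islice
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def batched(records: Iterable[T], batch_size: int) -> Iterator[List[T]]:
    """Yield successive lists of at most batch_size records."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    iterator = iter(records)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


def process_in_batches(records: Iterable[T], batch_size: int = 1000) -> int:
    """Process records batch by batch and return how many were handled."""
    total = 0
    for batch in batched(records, batch_size):
        # Stand-in for a real transform or load step (hypothetical).
        total += len(batch)
    return total
```

Because `batched` consumes its input lazily, memory use depends on the batch size rather than on the total volume of incoming data.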

Read the success story on how our highly skilled engineers built a 10 TB data lake for survey and near-real-time social media data

Data velocity

The velocity at which data comes in also plays a huge part. You will need to establish how often, and at what speed, the data will arrive. The velocity of real-time data will differ from that of data arriving at set intervals.

To deal with this challenge effectively, data pipelines need to be built with scalability in mind so they can keep up with evolving data. This involves both the selection of technologies and the infrastructure of the data pipeline. At Ardent, we have delivered many data pipeline solutions designed to be future-proof and to keep up with changes in our clients' data.

Data sources

The number of data sources you are collating data from will most likely evolve. You will need to consider how new sources will impact your data pipelines and whether your pipelines are built to handle the types of data they produce.

Read our client's success with their highly sophisticated data indexing tool to process a variety of data

Lack of standardization

Data coming in from multiple sources and multiple users will lack standardization. Standardizing it is one of the transformations the data pipeline performs. However, data that is vastly different and lacks any kind of standardization has to be mapped into a common format, which can be a complex process when managing data pipelines.
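As a rough illustration of mapping data into a common format, the sketch below renames source-specific fields to shared names and normalizes a few values. Everything specific in it is an assumption made for the example: the two sources (`crm`, `webshop`), their field names, the common schema, and the choice to treat timestamps without a time zone as UTC.

```python
from datetime import datetime, timezone

# Hypothetical mappings: source field name -> common field name.
FIELD_MAPS = {
    "crm": {"CustomerName": "name", "EmailAddress": "email", "Created": "created_at"},
    "webshop": {"full_name": "name", "mail": "email", "signup_ts": "created_at"},
}


def to_iso_utc(value):
    """Convert a Unix timestamp or an ISO 8601 string to an ISO 8601 UTC string."""
    if isinstance(value, (int, float)):
        dt = datetime.fromtimestamp(value, tz=timezone.utc)
    else:
        dt = datetime.fromisoformat(value)
        if dt.tzinfo is None:
            # Assumption for this sketch: naive timestamps are already UTC.
            dt = dt.replace(tzinfo=timezone.utc)
        dt = dt.astimezone(timezone.utc)
    return dt.isoformat()


def standardize(record, source):
    """Map one source record onto the common schema."""
    mapping = FIELD_MAPS[source]
    out = {common: record[src] for src, common in mapping.items() if src in record}
    if "name" in out:
        out["name"] = " ".join(out["name"].split())  # collapse stray whitespace
    if "email" in out:
        out["email"] = out["email"].strip().lower()
    if "created_at" in out:
        out["created_at"] = to_iso_utc(out["created_at"])
    out["source"] = source  # keep lineage so records can be traced back
    return out
```

Keeping the mappings in data rather than in code means that adding a source is mostly a matter of adding one entry to `FIELD_MAPS`, although real sources usually need extra, source-specific cleaning as well.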

Data security

When dealing with data, whether it is flowing through data pipelines or stored in a database, the utmost data security has to be in place. Data from a variety of sources with multi-user access can pose a risk to data security. This is especially important when you are dealing with sensitive or confidential data: the data needs to be located and catalogued, and privacy controls then need to be applied.
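One possible privacy control, once sensitive fields have been catalogued, is to pseudonymize them before the data moves further down the pipeline. The sketch below uses a keyed hash (HMAC-SHA256) from the Python standard library. The field catalogue `SENSITIVE_FIELDS` and the function names are hypothetical, and a real deployment would also need proper key management, which is not shown here.

```python
import hashlib
import hmac

# Hypothetical catalogue of fields that have been identified as sensitive.
SENSITIVE_FIELDS = frozenset({"email", "phone"})


def pseudonymize(value: str, key: bytes) -> str:
    """Return a keyed SHA-256 digest of value as a hex string."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def apply_privacy_controls(record, key, sensitive_fields=SENSITIVE_FIELDS):
    """Return a copy of record with catalogued sensitive fields pseudonymized."""
    protected = {}
    for field, value in record.items():
        if field in sensitive_fields and value is not None:
            protected[field] = pseudonymize(str(value), key)
        else:
            protected[field] = value
    return protected
```

The same input and key always give the same digest, so pseudonymized records can still be joined on the protected field without exposing the original value.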

Read about being ISO 27001 certified and what it means for data security.

Manual management of data pipelines

Manual management of data pipelines can be costly, time-consuming and prone to errors. Therefore, when you are managing data pipelines, automation should be at the heart of it. Automated data pipelines can save time and resources in the long run whilst mitigating risks of error.
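A small example of what automation can look like in practice is retrying a failed pipeline step automatically instead of waiting for someone to rerun it by hand. The helper below is a minimal sketch with assumed names and defaults (`run_with_retries`, three attempts, exponential backoff). Orchestration tools typically offer this kind of behaviour out of the box, so this is illustrative rather than a recommendation to build your own.

```python
import time


def run_with_retries(step, max_attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call step() until it succeeds, retrying with exponential backoff.

    The last exception is re-raised if every attempt fails.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return step()
        except Exception:
            if attempt == max_attempts:
                raise
            # Wait base_delay, then 2x, 4x, ... before the next attempt.
            sleep(base_delay * 2 ** (attempt - 1))
```

The `sleep` parameter is injectable so the helper can be exercised in tests without actually waiting.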

Peace of mind with data pipelines managed by Ardent

Managing a complex data pipeline can be a time-consuming and costly venture, especially if you do not have the right skills and expertise in-house. At Ardent, our highly skilled and experienced data engineers have worked with a wide variety of data, at a range of volumes and velocities, to deliver secure, scalable and efficient data solutions. If you are looking to outsource your data pipeline development or data pipeline management, then get in touch to find out how we can help.

Our clients succeed with effective data pipeline management

Improving data turnaround by 80% with Databricks for a Fortune 500 company

Explore data engineering services or data pipeline development services.
